PAST RESEARCH

The Graphics and Virtual Reality group has existed at UNC Chapel Hill since 1978 under various names, including Pixel-Planes, Tracker, Ultrasound, and Office of the Future, as well as part of the BeingThere Centre, among others. Our group has pursued such a variety of topics over the years that our projects and web pages have carried many different names.

This list is not exhaustive. See our publications page for a complete list of research output.


RESEARCH PROJECTS

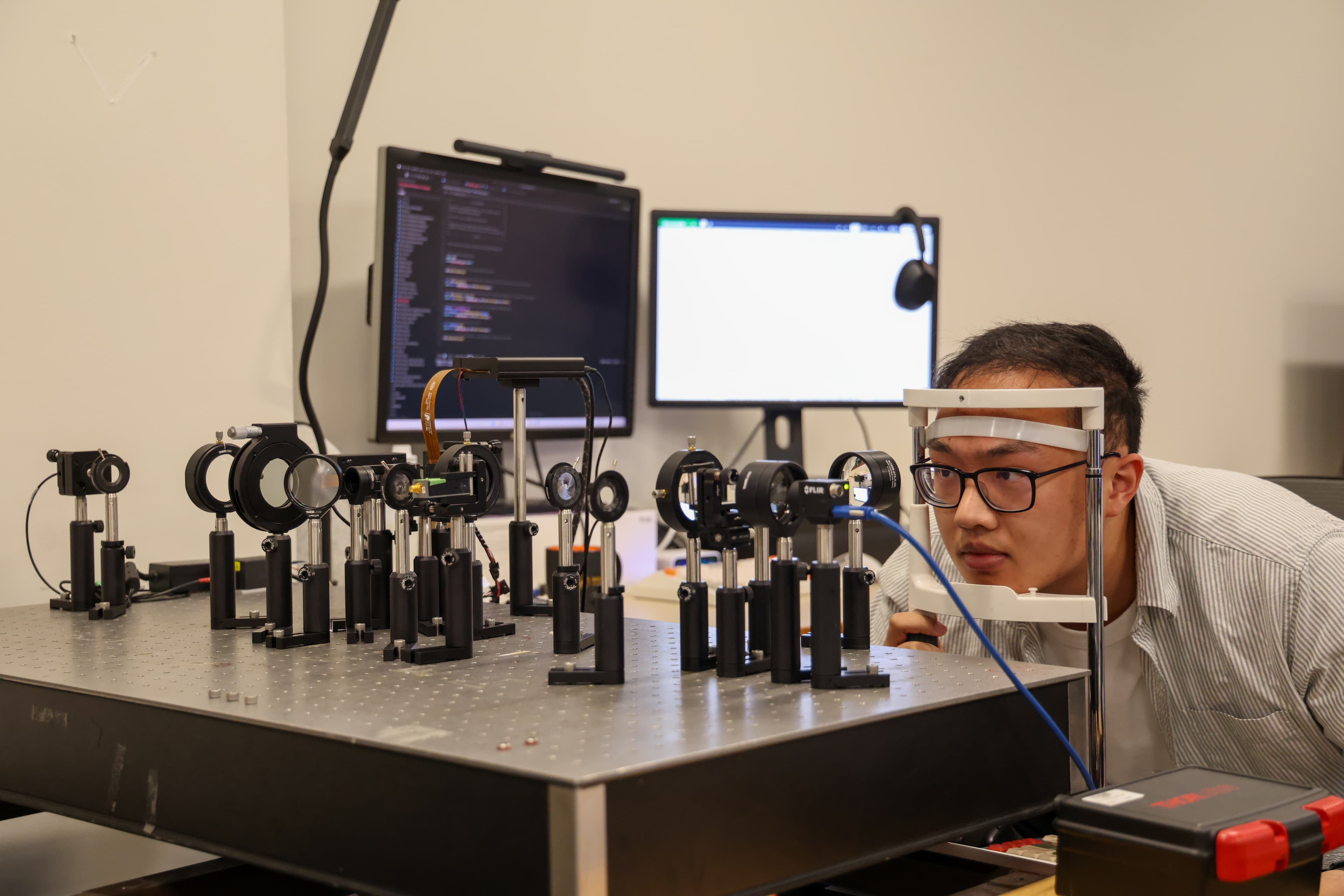

Dynamic Focus Augmented Reality Display

Tackled fundamental limitations of existing near-eye displays (NEDs) for augmented reality, including limited field of view, low angular resolution, and a fixed accommodative state. Proposed a new hybrid hardware design for NEDs that uses see-through deformable membrane mirrors to address the vergence-accommodation conflict.
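To make the vergence-accommodation conflict concrete: the eyes converge at a virtual object's distance, but on a fixed-focus display they must focus (accommodate) on the display's single image plane. The sketch below measures that mismatch in diopters (inverse meters). It is an illustrative calculation only, not the project's method; the function names, the interpupillary distance, and the distances are hypothetical, and it assumes symmetric convergence straight ahead.

```python
import math


def vergence_distance_m(ipd_m: float, vergence_angle_rad: float) -> float:
    """Fixation distance for symmetric convergence straight ahead.

    Each eye rotates inward by half the vergence angle, so the fixation
    point lies (ipd / 2) / tan(angle / 2) in front of the eyes.
    """
    return (ipd_m / 2.0) / math.tan(vergence_angle_rad / 2.0)


def vergence_angle_rad(ipd_m: float, distance_m: float) -> float:
    """Inverse of vergence_distance_m: angle needed to fixate at distance_m."""
    return 2.0 * math.atan((ipd_m / 2.0) / distance_m)


def vac_mismatch_diopters(vergence_dist_m: float, focal_plane_m: float) -> float:
    """Gap between where the eyes converge and where they must focus, in diopters."""
    return abs(1.0 / vergence_dist_m - 1.0 / focal_plane_m)


if __name__ == "__main__":
    ipd = 0.064  # hypothetical interpupillary distance in meters
    angle = vergence_angle_rad(ipd, 0.5)  # eyes fixate a virtual object 0.5 m away
    d = vergence_distance_m(ipd, angle)
    # Fixed-focus display with its image plane at 2 m: roughly 2.0 D - 0.5 D
    print(vac_mismatch_diopters(d, 2.0))
    # Varifocal display that moves its image plane to the vergence distance
    print(vac_mismatch_diopters(d, d))
```

A varifocal design would ideally operate in the second case, moving its image plane with the vergence distance so the mismatch stays near zero instead of the roughly 1.5 D of the fixed-focus case.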

Immersive Learning from 3D Reconstruction of Dynamic Scenes

Investigated 3D reconstruction of room-sized dynamic scenes containing moving humans and objects, with significantly higher quality and fidelity than previously possible. Enabled immersive learning of rare and important situations, such as emergency medical procedures, through post-event, annotated, guided virtual reality experiences.

Low Latency Display

Developed a tracking and display system suitable for ultra-low-latency, optical see-through, augmented reality head-mounted displays. The display ran at a very high frame rate, performing in-display corrections on the supplied imagery to account for the latest tracking information.
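The page does not describe how the in-display corrections were computed. As a rough illustration only, one simple form of late correction treats a small head rotation that occurred after rendering as a 2D image shift: a rotation by angle θ moves distant content by about f·tan θ pixels, where f is the focal length in pixels. The function names, sign conventions, and focal length below are hypothetical assumptions; a real system would also need to handle translation, lens distortion, and sub-pixel motion.

```python
import math

import numpy as np


def pixel_shift(d_yaw_rad: float, d_pitch_rad: float, focal_px: float) -> tuple[int, int]:
    """Approximate image-space shift for a small head rotation since render time.

    Assumed conventions (hypothetical): positive yaw turns the head right, so
    world content moves left (negative x); positive pitch tilts the head up, so
    content moves down (positive y, because rows grow downward).
    """
    dx = -focal_px * math.tan(d_yaw_rad)
    dy = focal_px * math.tan(d_pitch_rad)
    return int(round(dx)), int(round(dy))


def shift_image(img: np.ndarray, dx: int, dy: int) -> np.ndarray:
    """Translate img by (dx, dy) pixels; uncovered pixels are filled with 0."""
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    if abs(dx) >= w or abs(dy) >= h:
        return out  # the whole frame was shifted off-screen
    # Overlapping source window; the destination is the same window offset by (dx, dy).
    xs0, xs1 = max(0, -dx), min(w, w - dx)
    ys0, ys1 = max(0, -dy), min(h, h - dy)
    out[ys0 + dy:ys1 + dy, xs0 + dx:xs1 + dx] = img[ys0:ys1, xs0:xs1]
    return out
```

For example, with a hypothetical 1000-pixel focal length, a 0.5-degree yaw change between rendering and display gives `pixel_shift(math.radians(0.5), 0.0, 1000.0) == (-9, 0)`, a shift of about 9 pixels to the left, which `shift_image` then applies to the last rendered frame.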

Pinlight Display

Presented a novel design for an optical see-through augmented reality display offering a wide field of view in a compact form factor approaching ordinary eyeglasses. Demonstrated a real-time prototype display with a 110-degree diagonal field of view.

Office of the Future

Combined computer vision and computer graphics for panoramic image display, tiled display systems, image-based modeling, and immersive environments. Goals included a better everyday graphical display environment and 3D tele-immersion capabilities allowing distant people to share a virtual office space.

3D Telepresence for Medical Consultation

Developed and tested 3D telepresence technologies for remote medical consultations between an advising healthcare provider and a distant advisee. Focused on real-time acquisition and novel view generation, network congestion handling, and tracking and displays for producing accurate 3D depth cues and motion parallax.

Wall-to-Wall Telepresence

Enabled visualization of large 3D models on a multi-screen 2D display for telepresence applications. Experimented with large datasets such as reconstructed models of the Roman Colosseum and dense indoor room models, finding the visualization convincing for single users.

Multi-view Teleconferencing

Developed a two-site teleconferencing system that supports multiple people at each site while maintaining gaze awareness among all participants by providing each local participant with a unique view of the remote site.

Avatar Telepresence

Developed a one-to-many teleconferencing system in which a user inhabits an animatronic Shader Lamps avatar at a remote site, allowing the user to maintain gaze awareness with the people there.

Ultrasound / Medical Augmented Reality

Developed a system allowing physicians to see directly inside a patient using augmented reality. Combined ultrasound echography imaging, laparoscopic range imaging, video see-through head-mounted displays, and high-performance graphics to create merged real and synthetic images.

Pixel-Planes & PixelFlow

Since 1980, explored computer architectures for 3D graphics that offer dramatically higher performance with wide flexibility. Provided useful systems for researchers in medical visualization, molecular modeling, and architectural design exploration who required graphics power far beyond that of commercially available systems.

Telecollaboration

Developed a distributed collaborative design and prototyping environment in which researchers at geographically distributed sites could work together in real time. Spanned both desktop and immersive VR, covering shared virtual environments, collaborative VR, modeling and interaction, scene acquisition, and wide-field-of-view tracking.

Image-Based Rendering

Investigated representing complex 3D environments with sets of depth-enhanced images rather than geometric primitives. Developed algorithms for processing depth-enhanced images to produce new images from arbitrary viewpoints not included in the original image set.
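As an illustration of producing a new view from a depth-enhanced image, the sketch below forward-warps each pixel: it unprojects the pixel into 3D using its depth, moves the point into a target camera, reprojects it, and resolves collisions with a z-buffer. This is the generic 3D image warping idea under simple assumptions (both views share one pinhole intrinsic matrix `K`, depth is camera-space z, and `(R, t)` maps source-camera coordinates to target-camera coordinates); it is not the group's specific algorithm, and it leaves disocclusion holes unfilled.

```python
import numpy as np


def warp_depth_image(color, depth, K, R, t):
    """Forward-warp a depth-enhanced image into a new pinhole camera view.

    color: (H, W) or (H, W, C) array; depth: (H, W) camera-space z (> 0);
    K: 3x3 intrinsics shared by both views; R, t: source-to-target rotation
    (3x3) and translation (3,). Target pixels that receive no sample stay 0.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]
    # Homogeneous pixel coordinates, one column per source pixel.
    pix = np.stack([u.ravel(), v.ravel(), np.ones(h * w)]).astype(float)
    # Unproject: X = z * K^-1 [u, v, 1]^T
    pts = (np.linalg.inv(K) @ pix) * depth.ravel()
    # Rigidly move the points into the target camera frame.
    pts_t = R @ pts + np.asarray(t, dtype=float).reshape(3, 1)
    z = pts_t[2]
    src = np.flatnonzero(z > 1e-9)  # keep only points in front of the target camera
    proj = K @ pts_t[:, src]
    ut = np.round(proj[0] / proj[2]).astype(int)
    vt = np.round(proj[1] / proj[2]).astype(int)
    inside = (ut >= 0) & (ut < w) & (vt >= 0) & (vt < h)
    src, ut, vt = src[inside], ut[inside], vt[inside]

    flat = color.reshape((h * w,) + color.shape[2:])
    out = np.zeros_like(color)
    zbuf = np.full((h, w), np.inf)
    # Z-buffer: when several samples land on one target pixel, keep the nearest.
    for i, x, y in zip(src, ut, vt):
        if z[i] < zbuf[y, x]:
            zbuf[y, x] = z[i]
            out[y, x] = flat[i]
    return out
```

The explicit loop keeps the z-test readable; vectorized versions must take care, because NumPy does not guarantee which value wins when a fancy-indexed assignment writes the same element more than once.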

Wide-Area Tracking

Developed wide-area systems for six-degree-of-freedom (6D) tracking of heads, limbs, and hand-held devices for head-mounted and head-tracked stereoscopic displays, giving users the impression of being immersed in a simulated three-dimensional environment.
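One standard way a head-tracked display produces correct perspective and motion parallax is an off-axis (asymmetric) viewing frustum recomputed from the tracked eye position every frame. The sketch below assumes a hypothetical setup: a flat rectangular screen lying in the z = 0 plane with edges [x0, x1] × [y0, y1], and an eye at (ex, ey, ez) with ez > 0, all in meters in the tracker's frame. It returns glFrustum-style bounds and the corresponding projection matrix; the view transform must additionally translate the world by the negated eye position.

```python
def off_axis_frustum(eye, screen, near):
    """Asymmetric frustum bounds (left, right, bottom, top) at the near plane.

    eye: (ex, ey, ez) with ez > 0, the eye's distance in front of the screen.
    screen: (x0, x1, y0, y1), the screen rectangle in the z = 0 plane.
    """
    ex, ey, ez = eye
    x0, x1, y0, y1 = screen
    scale = near / ez  # similar triangles: scale screen edges onto the near plane
    return ((x0 - ex) * scale, (x1 - ex) * scale,
            (y0 - ey) * scale, (y1 - ey) * scale)


def frustum_matrix(left, right, bottom, top, near, far):
    """The 4x4 perspective matrix documented for OpenGL's glFrustum, row by row."""
    return [
        [2 * near / (right - left), 0.0, (right + left) / (right - left), 0.0],
        [0.0, 2 * near / (top - bottom), (top + bottom) / (top - bottom), 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]
```

Moving the tracked eye to the right skews the frustum to the left (for a centered 1 m screen viewed from 1 m, an eye at x = 0.25 m gives left = -0.075 and right = 0.025 at a 0.1 m near plane), so the user sees more of the virtual scene on the far side, as when looking through a real window.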

Wide Area Visuals with Projectors

Developed a robust multi-projector display and rendering system that was portable and rapidly deployable in geometrically complex display environments. Achieved seamless geometric and photometric image projection with continuous self-calibration using pan-tilt-zoom cameras.
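The page does not say how seamless photometric blending was achieved. A common technique, sketched here in one dimension with hypothetical names, is to feather each projector's contribution by its distance to the edge of its own coverage and normalize so that the weights in an overlap sum to one; each weight is then converted to a pixel value through the inverse of an assumed power-law projector response (gamma 2.2). A real system would work in 2D and would estimate each projector's coverage from camera-based calibration.

```python
def feather_weights(x, extents):
    """Normalized blend weight of each projector at display coordinate x.

    extents: list of (start, end) intervals, one per projector, giving the
    part of the display each projector covers (a hypothetical 1D layout).
    """
    # Distance to the nearest edge of each projector's coverage; 0 outside it.
    raw = [max(0.0, min(x - start, end - x)) for start, end in extents]
    total = sum(raw)
    if total == 0.0:
        return [0.0] * len(extents)  # x is not inside any projector's coverage
    return [r / total for r in raw]


def pixel_value(weight, gamma=2.2):
    """Pixel value (0..1) whose emitted light is `weight` of full brightness.

    Assumes a simple power-law response: light = value ** gamma.
    """
    return weight ** (1.0 / gamma)
```

With two projectors covering [0, 10] and [8, 18], `feather_weights(9.0, [(0, 10), (8, 18)])` returns `[0.5, 0.5]` in the middle of the overlap, and each projector's pixel there is attenuated to `pixel_value(0.5)`, about 0.73, so their combined light matches a single unblended projector.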

3D Laparoscopic Visualization

Designed and prototyped a three-dimensional visualization system to assist with laparoscopic surgical procedures. Combined 3D visualization, depth extraction from laparoscopic images, and six-degree-of-freedom tracking to display merged real and synthetic imagery in a surgeon's video see-through head-mounted display.

Desktop 3D Telepresence

Research into desktop-based 3D telepresence systems enabling remote collaboration with realistic depth cues and spatial awareness.

Egocentric Body Pose Tracking

Developed techniques for tracking body pose from an egocentric (first-person) perspective, with applications in virtual reality and human-computer interaction.

Eye Tracking with 3D Pupil Localization

Advanced eye-tracking methods by using 3D pupil localization to improve the accuracy of gaze estimation for near-eye display applications.

Holographic Near-Eye Display

Research into holographic techniques for near-eye displays, enabling more natural and comfortable augmented reality experiences with proper focus cues.

Novel View Rendering using Neural Networks

Explored the use of neural networks for generating novel viewpoints of scenes, combining deep learning with traditional rendering techniques for high-quality view synthesis.